Key Highlights:

- Big cloud players now openly compete with Nvidia’s AI dominance.

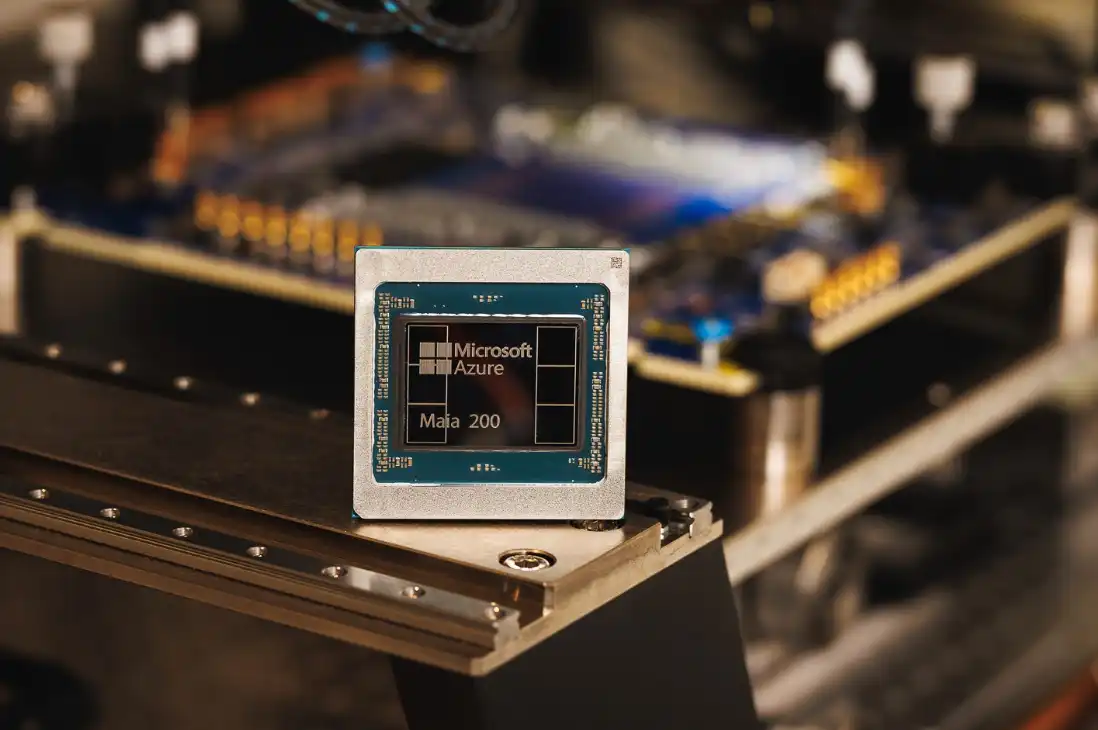

- Microsoft unveils Maia 200, its second-generation in-house AI chip.

- The chip goes live in Iowa this week, with Arizona next.

- Microsoft pairs Maia 200 with Triton, challenging Nvidia’s CUDA software moat.

Microsoft has unveiled the Maia 200, the second generation of its in-house AI chip, alongside new software tools designed to rival Nvidia’s dominance. The move matters because it targets Nvidia’s biggest strength — its developer ecosystem — and signals a deeper shift in how cloud giants build and control AI infrastructure.

The Maia 200 goes live this week in a Microsoft data center in Iowa. A second deployment is planned in Arizona. It follows the original Maia chip introduced in 2023 and places Microsoft in direct competition with Nvidia, whose GPUs power most of today’s AI workloads.

Across the cloud industry, the pattern is clear. Microsoft, Google, and Amazon all build their own AI chips. All three are among Nvidia’s biggest customers, and all three want to depend on it less.

Why is Microsoft building its own AI chips?

The answer is control. AI demand is exploding. Training and serving large models is expensive. By designing its own silicon, Microsoft can tune hardware for its workloads, manage costs, and reduce reliance on external suppliers.

The Maia 200 is manufactured by TSMC using 3-nanometer technology, the same advanced process used for Nvidia’s upcoming “Vera Rubin” chips. It uses high-bandwidth memory, though from an older generation than the memory in Nvidia’s newest parts.

Microsoft also packed the chip with a large amount of SRAM. This fast on-chip memory helps AI systems respond quickly when many users query a chatbot at once. Competitors like Cerebras and Groq use the same strategy.

How Microsoft is challenging Nvidia’s software moat

Hardware is only part of the battle. Nvidia’s biggest advantage is CUDA, the software platform most developers use to program its GPUs for AI work. Code written for CUDA runs only on Nvidia chips, which ties those workloads to Nvidia hardware.

Microsoft now counters that with Triton, an open-source programming tool developed with major contributions from OpenAI. Triton handles many of the same tasks as CUDA, but it is designed to target more than one vendor’s hardware.

This is a direct challenge to Nvidia’s strongest defense.

If developers can write models in Triton and run them efficiently on Maia chips, the cost of switching away from Nvidia drops. That changes the balance of power in AI infrastructure.

A wider industry shift

Google already offers its Tensor Processing Units. Amazon has Trainium and Inferentia. Meta is exploring alternatives. Even Nvidia’s largest customers now hedge.

The Maia 200 shows that Microsoft is no longer just a cloud provider. It is becoming a full-stack AI platform — from chips to software to models.

The race is no longer only about faster GPUs. It is about ecosystems.

And with Maia 200 and Triton, Microsoft is signaling that it wants to own both the chips and the software ecosystem around them.