Codex Unrolled: Inside the Agent Loop That Powers OpenAI’s AI Coding System

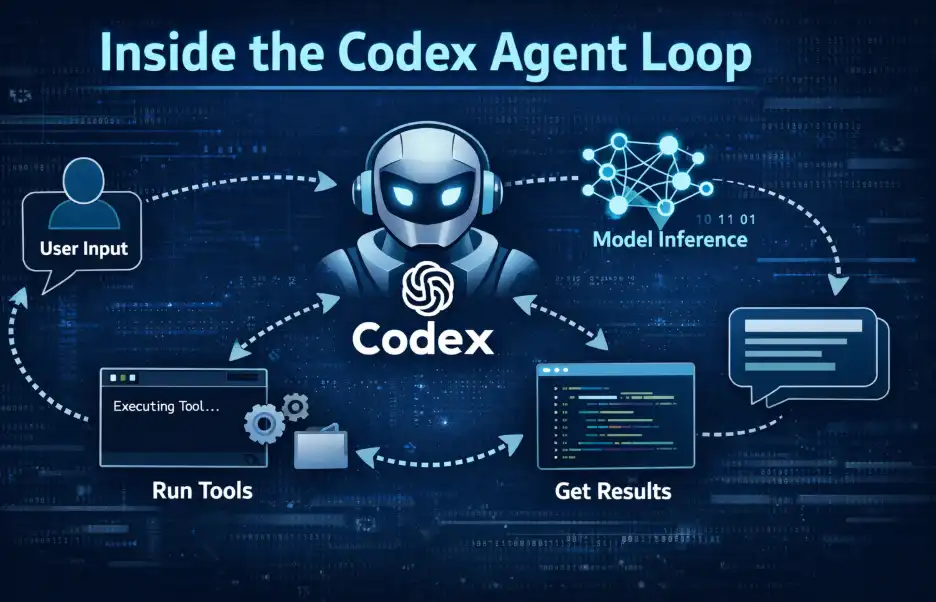

OpenAI has published a deep technical breakdown of how Codex works, revealing the “agent loop” that powers its AI-driven coding system. The post explains how Codex turns user instructions into real software actions by coordinating between the model, tools, and local environments.

This matters because Codex is no longer just a chatbot that writes code. It is a full software agent. It can inspect files, run commands, edit projects, and return with a final result. Understanding this loop explains why modern AI tools feel less like assistants and more like collaborators.

At the center of Codex is a simple cycle. First, it takes user input and builds a structured prompt. Next, it queries the model. The model either replies directly or asks to run a tool. If it calls a tool, Codex executes it, adds the output back into the prompt, and queries the model again. This continues until the model produces a final assistant message.
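
The cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not Codex's actual implementation: the item shapes, `run_turn`, and `scripted_model` are hypothetical names, and the "model" is a scripted stand-in rather than a real inference call.

```python
from typing import Callable

# Hypothetical shapes: a prompt is a list of dict items, and the "model" is any
# callable that maps the prompt to a final message or a tool request.
Item = dict
Model = Callable[[list[Item]], Item]


def run_turn(user_input: str, model: Model,
             tools: dict[str, Callable[[str], str]],
             max_steps: int = 50) -> tuple[str, list[Item]]:
    """Run one turn: loop until the model returns a final assistant message."""
    prompt: list[Item] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = model(prompt)
        prompt.append(reply)
        if reply["type"] == "message":
            return reply["content"], prompt
        # Otherwise the model asked for a tool: run it and append the output.
        output = tools[reply["name"]](reply["arguments"])
        prompt.append({"type": "tool_output", "name": reply["name"],
                       "content": output})
    raise RuntimeError("turn did not finish within max_steps")


def scripted_model(prompt: list[Item]) -> Item:
    """Stand-in for a real model: requests one tool call, then answers."""
    if not any(item.get("type") == "tool_output" for item in prompt):
        return {"type": "tool_call", "name": "list_files", "arguments": "."}
    listing = prompt[-1]["content"]
    return {"type": "message", "content": f"Found files: {listing}"}
```

Calling `run_turn("what is here?", scripted_model, {"list_files": lambda path: "a.py, b.py"})` performs one tool call and then returns the final message along with the accumulated prompt.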

One full pass through this process, from user input to the final assistant message, is called a “turn.” A single turn can include dozens of tool calls. The visible message at the end is only the surface; most of the real work happens inside the loop.

How does the agent loop actually work?

Codex uses OpenAI’s Responses API to run inference. Instead of sending raw text, it sends structured inputs. These include system rules, developer instructions, environment details, and the user’s message.

Each piece is tagged with a role. System messages carry the highest priority. Developer messages define guardrails. User messages describe the task. Assistant outputs and tool results are appended as the conversation proceeds.
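
As a rough illustration of role-tagged input, the sketch below assembles the initial items in the order the article lists them. The function name and field layout are hypothetical, and sending environment details as a user-role item is an assumption made for the example, not something the article states.

```python
def build_initial_input(system_rules: str, developer_instructions: str,
                        environment: str, user_message: str) -> list[dict]:
    """Assemble role-tagged items, highest-priority role first."""
    return [
        {"role": "system", "content": system_rules},
        {"role": "developer", "content": developer_instructions},
        # Assumption: environment details travel as a user-role item.
        {"role": "user", "content": environment},
        {"role": "user", "content": user_message},
    ]
```

Assistant outputs and tool results would then be appended to this list as the turn progresses.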

This design ensures that every new request builds on the entire conversation. The previous prompt becomes a prefix of the next one. That allows Codex to reuse context efficiently and keep long sessions coherent.
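
The prefix property can be checked directly. In this sketch, `is_prefix` and the item contents are illustrative only; the point is that a follow-up request appends new items and leaves earlier ones untouched.

```python
def is_prefix(previous: list[dict], current: list[dict]) -> bool:
    """True if `current` starts with exactly the items in `previous`."""
    return current[:len(previous)] == previous


first_request = [
    {"role": "user", "content": "Fix the failing test"},
]

# The next request appends the tool call and its output; nothing is rewritten.
second_request = first_request + [
    {"type": "tool_call", "name": "shell", "arguments": "pytest -q"},
    {"type": "tool_output", "content": "1 failed"},
]
```

Here `is_prefix(first_request, second_request)` holds, whereas editing the first item before resending would break the property.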

When the model requests a tool, Codex runs it. This can be a shell command, a file read, or an external MCP tool. The result flows back into the prompt. The model then reasons with fresh data.
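
A tool dispatcher along these lines might look like the following sketch. The tool names, the `TOOLS` registry, and the output format are assumptions for illustration; MCP tools are omitted, and a real agent would add sandboxing and approval checks before running anything.

```python
import subprocess
from pathlib import Path


def run_shell(command: list[str], timeout: float = 10.0) -> str:
    """Run a command (no shell interpolation) and return its output."""
    result = subprocess.run(command, capture_output=True, text=True,
                            timeout=timeout)
    return f"exit_code={result.returncode}\n{result.stdout}{result.stderr}"


def read_file(path: str) -> str:
    """Return the contents of a UTF-8 text file."""
    return Path(path).read_text(encoding="utf-8")


# Hypothetical registry mapping tool names to handlers.
TOOLS = {"shell": run_shell, "read_file": read_file}


def execute_tool_call(call: dict) -> dict:
    """Dispatch a tool request and wrap the result as a prompt item."""
    handler = TOOLS.get(call["name"])
    if handler is None:
        content = f"unknown tool: {call['name']}"
    else:
        try:
            content = handler(call["arguments"])
        except Exception as exc:
            # Report failures back to the model instead of crashing the loop.
            content = f"tool error: {exc}"
    return {"type": "tool_output", "name": call["name"], "content": content}
```

Returning errors as ordinary tool output lets the model see what went wrong and decide how to proceed.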

Why does context management matter?

Every model has a context window. It limits how much text the model can take in for a single request. Since Codex can make hundreds of tool calls in one task, and each result is added to the prompt, the prompt can grow quickly.

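Codex manages this growth by keeping the prompt structured and incremental: new items are appended rather than earlier ones being rewritten. This lets it maintain continuity while staying within the window's limits. It also enables prompt caching, because each request begins with the same prefix as the last, which makes repeated queries faster and cheaper.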
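
To make the budgeting and caching argument concrete, the sketch below estimates how much of a request repeats the previous one and whether the whole request fits in the window. All names are hypothetical, and the four-characters-per-token estimate is a crude assumption; real token counts come from the model's tokenizer.

```python
def estimate_tokens(items: list[dict]) -> int:
    """Rough size estimate: about 4 characters per token (an assumption)."""
    return sum(len(str(item.get("content", ""))) for item in items) // 4


def shared_prefix(previous: list[dict], current: list[dict]) -> list[dict]:
    """Return the leading items that are identical in both requests."""
    shared = []
    for old, new in zip(previous, current):
        if old != new:
            break
        shared.append(new)
    return shared


def request_report(previous: list[dict], current: list[dict],
                   context_window: int) -> dict:
    """Summarize the request size and how much of it repeats the last one."""
    reusable = estimate_tokens(shared_prefix(previous, current))
    total = estimate_tokens(current)
    return {
        "total_tokens": total,
        "reusable_tokens": reusable,  # candidate for a prompt-cache hit
        "new_tokens": total - reusable,
        "fits": total <= context_window,
    }
```

With an append-only prompt, the reusable portion grows every step; rewriting an early item drops it to zero, which is why the incremental structure matters for caching.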

Without this layer, an AI agent would lose track of what it had already done. Codex avoids that by recording every step explicitly in the prompt.

Codex shows how AI is shifting from “answering questions” to “doing work.” The agent loop turns language into action. It makes the model a real participant in software development. As Codex expands across tools and platforms, this loop becomes the blueprint for how future AI agents will operate.