A New Phase for Medical AI

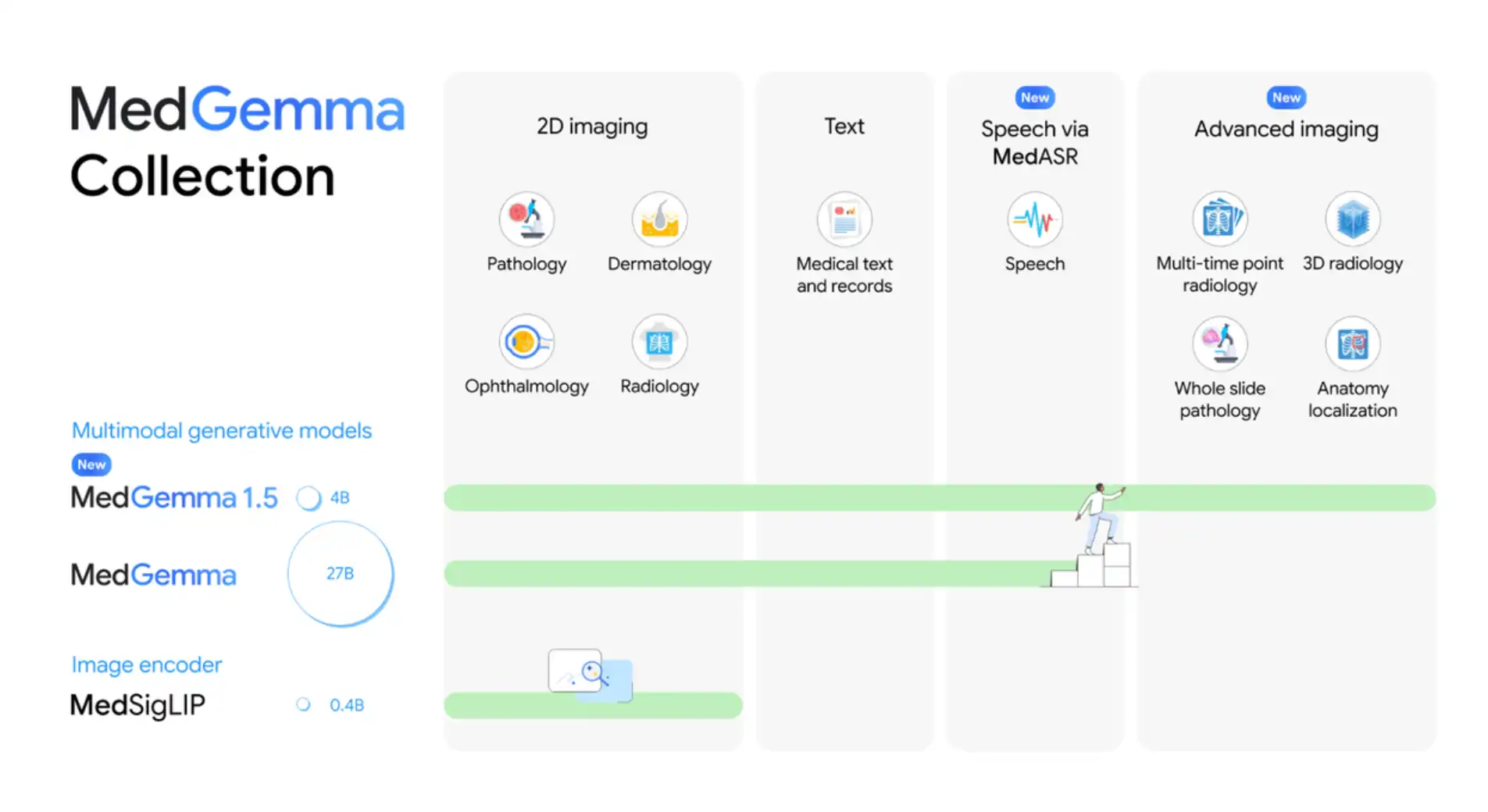

Google has unveiled MedGemma 1.5, an upgraded open medical AI model designed for real-world healthcare tasks. The update extends the model beyond flat, two-dimensional images: it now interprets CT scans, MRI volumes, and full pathology slides.

At the same time, Google has launched MedASR, a medical speech-to-text model built for doctor dictation. Together, they bring text, images, and voice into one AI workflow.

The goal is simple. Help developers build better healthcare tools. And do it with open models.

What Makes MedGemma 1.5 Different

MedGemma 1.5 improves how AI reads complex medical data. It can now analyze three-dimensional scans and multi-slice images. Developers can feed CT or MRI slices and ask medical questions.
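
As a rough illustration of what "feeding slices" can involve, the sketch below picks a fixed number of evenly spaced slices from a scan before they are paired with a question. The article does not describe how MedGemma 1.5 selects or packs slices, so the function name `sample_slice_indices` and the `max_slices` budget are assumptions made for this example, not part of any Google API.

```python
from typing import List


def sample_slice_indices(num_slices: int, max_slices: int) -> List[int]:
    """Pick up to max_slices evenly spaced slice indices from a 3D scan.

    Hypothetical preprocessing step: it only chooses which slices to send.
    Loading the images and calling a model are out of scope here.
    """
    if num_slices <= 0 or max_slices <= 0:
        return []
    if num_slices <= max_slices:
        # Small volume: keep every slice.
        return list(range(num_slices))
    if max_slices == 1:
        # Single-slice budget: take the middle of the volume.
        return [num_slices // 2]
    # Spread the budget from the first slice to the last, using integer math
    # so the result is deterministic.
    last = num_slices - 1
    return [(i * last) // (max_slices - 1) for i in range(max_slices)]


# A 100-slice CT volume reduced to 5 slices.
print(sample_slice_indices(100, 5))  # [0, 24, 49, 74, 99]
```

Even spacing is only one possible policy. A real application might instead favor slices around a region of interest.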

The model shows clear gains over its predecessor. CT disease classification improves by 3 percent, and MRI accuracy jumps by 14 percent. In pathology tasks, it goes from near-zero fidelity to matching specialized models.

It also gets better at daily hospital needs. It localizes anatomy in chest X-rays. It reviews image timelines. It extracts data from lab reports. Each task sees double-digit gains.

For text, MedGemma 1.5 answers medical questions more accurately. It also retrieves data from electronic health records with far higher precision.

MedASR Brings Doctors’ Voices to AI

Typing remains slow in clinical settings. MedASR changes that.

This new model converts medical speech into text with far fewer errors. On chest X-ray dictation, it cuts mistakes by more than half compared to general speech models. On broader medical speech, errors drop by over 80 percent.
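
Speech-recognition mistakes are commonly measured as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the transcript into the reference, divided by the length of the reference. Assuming that is the kind of metric behind the figures above, a minimal sketch of the calculation looks like this. The sample sentences and the baseline numbers are invented for illustration and are not MedASR results.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction of the baseline error removed by the improved system."""
    return (baseline - improved) / baseline


# Made-up dictation: one substituted word and one dropped word out of five.
wer = word_error_rate(
    "no focal consolidation or effusion",
    "no vocal consolidation effusion",
)
print(wer)  # 0.4
```

With these helpers, a model that halves a baseline WER has a `relative_reduction` of 0.5, which is the sense in which a model "cuts mistakes by more than half".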

Doctors can now speak. MedASR transcribes. MedGemma reasons. The loop feels natural.

Open Models, Real Impact

All these models remain open. Developers can run them offline or scale them on Google Cloud. They are free for research and commercial use.

To spark adoption, Google has launched the MedGemma Impact Challenge on Kaggle with $100,000 in prizes. The aim is clear. Turn these models into tools that improve care.

MedGemma 1.5 signals a shift. Medical AI is no longer limited to text or flat images. It now sees scans. It hears doctors. And it moves closer to clinical reality.