Key Highlights:

- Nvidia officially unveils its next-generation Rubin architecture at CES

- Rubin enters full production and targets massive AI compute growth

- The platform promises major gains in training speed and inference efficiency

- Major cloud providers and supercomputer projects are already lining up for Rubin

At the Consumer Electronics Show, Nvidia revealed its most ambitious computing platform yet. CEO Jensen Huang formally launched the Rubin architecture, calling it the company’s new state of the art in AI hardware. According to Nvidia, Rubin is already in full production and will scale further in the second half of the year.

The launch highlights how quickly AI compute demands are rising. As models grow larger and workflows become more complex, hardware must evolve just as fast.

Why Rubin Matters for the AI Era

Rubin is designed to handle the exploding need for computation across AI training and inference. Huang said the amount of compute required for AI is “skyrocketing,” and Rubin directly targets that challenge.

The architecture will succeed Blackwell, which itself replaced Hopper and Lovelace. This rapid cadence shows how Nvidia continues to refresh its platforms to stay ahead of AI workloads.

Built for the World’s Biggest AI Players

Rubin chips are already slated for use by major cloud providers and AI labs. Nvidia confirmed partnerships involving Anthropic, OpenAI, and Amazon Web Services. Beyond the cloud, Rubin systems will also power HPE’s Blue Lion supercomputer and the upcoming Doudna system at Lawrence Berkeley National Lab.

This wide adoption signals strong confidence in the platform even before large-scale deployment begins.

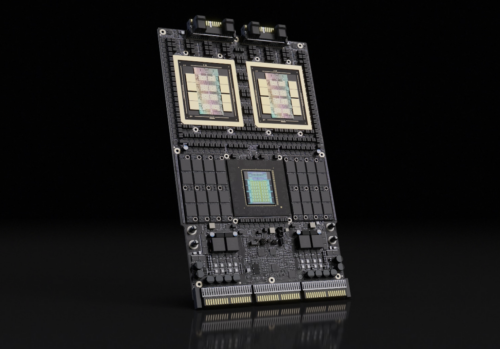

A Six-Chip Architecture Focused on Balance

Named after astronomer Vera Rubin, the architecture combines six chips designed to work together. The Rubin GPU sits at its core, but Nvidia also targets long-standing bottlenecks beyond raw compute.

The platform introduces advances in BlueField networking and NVLink interconnects. It also adds a new Vera CPU, which is designed to support agentic reasoning and long-running AI tasks.

Smarter Storage for Agentic AI

Modern AI systems increasingly rely on memory-heavy workflows. Nvidia says agentic AI and long-term reasoning place heavy pressure on key-value cache memory.

To address this, Rubin introduces a new external storage tier that lets AI systems scale their memory pools more efficiently while reducing strain on the compute device itself.
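To see why long contexts put pressure on key-value cache memory, a rough estimate helps. The sketch below uses the standard transformer accounting of one key and one value vector per layer, per KV head, per token. The model shape is hypothetical and is not a Rubin or Nvidia specification.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Estimate KV cache size in bytes for a transformer.

    Each token stores one key and one value vector (the factor of 2)
    for every layer and every KV head.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len * batch_size


# Hypothetical model shape, chosen only for illustration:
# 80 layers, 8 KV heads of dimension 128, 16-bit values, 131,072-token context.
size = kv_cache_bytes(num_layers=80, num_kv_heads=8, head_dim=128, seq_len=131072)
print(f"{size / 1024**3:.0f} GiB")  # prints "40 GiB"
```

Under these assumptions, a single long-running session needs about 40 GiB for its cache alone, and the figure grows linearly with context length and the number of concurrent sessions.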

Performance Gains That Redefine Scale

Nvidia claims significant performance gains for the new platform. The company says Rubin is up to 3.5 times faster than Blackwell for model training and up to five times faster for inference.

Peak performance reaches as high as 50 petaflops. Nvidia also says Rubin supports eight times more inference compute per watt, which matters as power becomes a limiting factor for AI infrastructure.
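As a back-of-the-envelope illustration, the short sketch below turns those multipliers into best-case numbers. The baseline workloads are hypothetical, and because Nvidia's figures are stated as "up to," the results are upper bounds, not expected values.

```python
# Multipliers as claimed by Nvidia, relative to Blackwell.
TRAINING_SPEEDUP = 3.5
INFERENCE_SPEEDUP = 5.0
INFERENCE_PER_WATT_GAIN = 8.0


def best_case_duration(baseline, speedup):
    """Best-case run time if the full claimed speedup is realized."""
    return baseline / speedup


def best_case_energy(baseline_kwh, per_watt_gain):
    """Best-case energy for the same work, assuming the per-watt gain
    applies uniformly (a simplifying assumption)."""
    return baseline_kwh / per_watt_gain


# Hypothetical baselines: a 35-day training run and an 800 kWh inference job.
print(best_case_duration(35, TRAINING_SPEEDUP))         # 10.0 days
print(best_case_energy(800, INFERENCE_PER_WATT_GAIN))   # 100.0 kWh
```

Real workloads rarely hit vendor peak figures, so these numbers are best read as a ceiling on what the claimed gains could deliver.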

The Bigger AI Infrastructure Race

Rubin arrives amid intense global competition for AI hardware. On a recent earnings call, Huang estimated that $3 trillion to $4 trillion could be spent on AI infrastructure over the next five years.

With Rubin, Nvidia positions itself to power much of that growth.