A Personal AI in Your Pocket

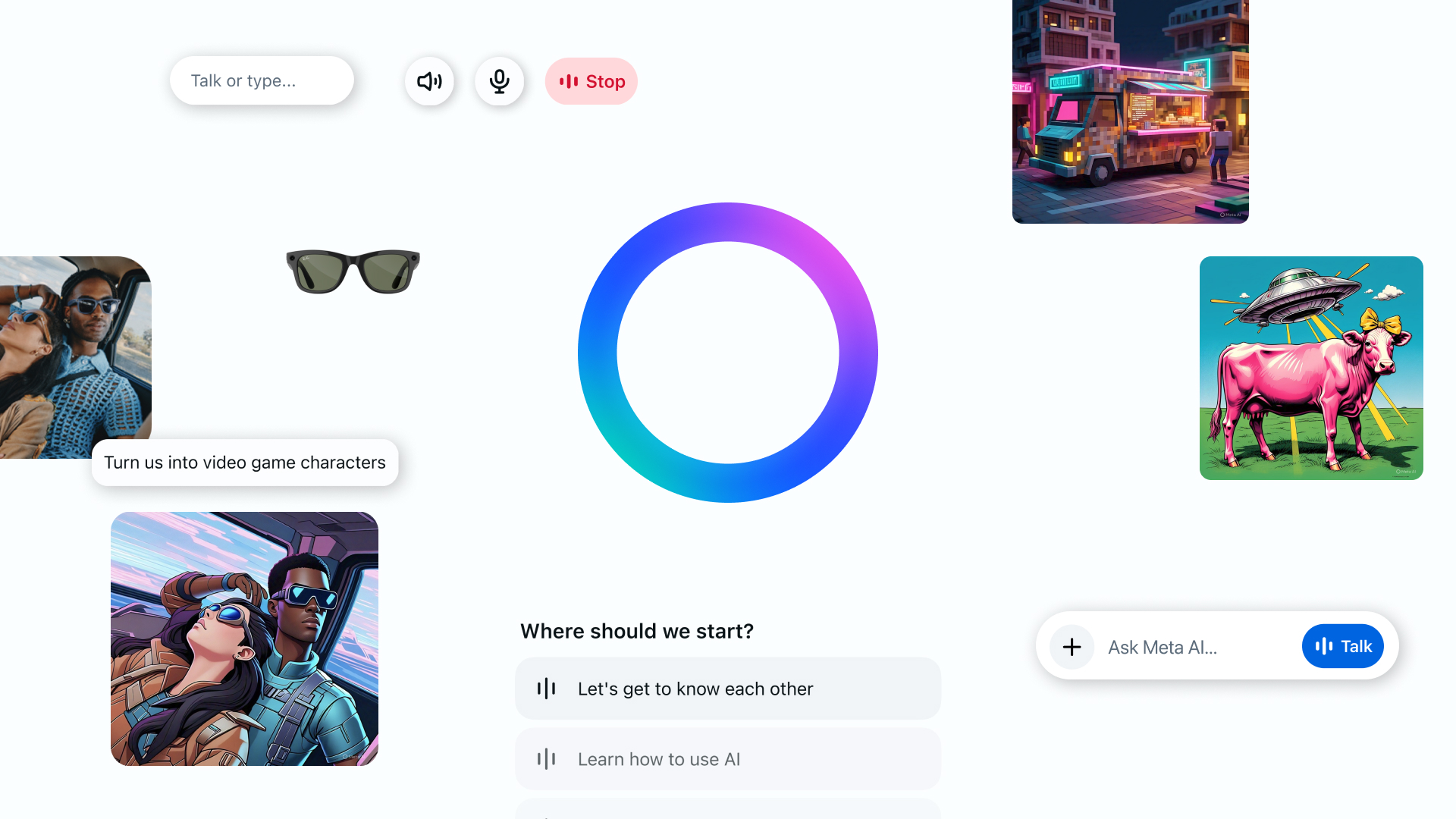

Meta has launched a new standalone Meta AI app powered by Llama 4, aiming to deliver a deeply personalized, voice-first assistant that works seamlessly across platforms. Available now in select countries, the app builds on Meta AI’s integration across WhatsApp, Instagram, Facebook, and Messenger.

Meta calls this launch “a first step toward building a more personal AI,” as it offers users the option to talk to Meta AI through natural voice conversations. The app is designed with multitasking in mind, allowing hands-free interaction while showing a clear indicator when the mic is in use.

Built with Llama 4, Designed to Feel Personal

The app leverages Llama 4 to provide more relevant, conversational responses. Unlike traditional voice assistants, Meta AI is designed to get to know you over time — adapting based on your profile, activity, and preferences from other Meta platforms. Personalized answers are currently rolling out in the US and Canada, with tighter integration for users who’ve linked their Facebook and Instagram accounts through the Meta Accounts Center.

Full-Duplex Voice Demo: A Glimpse into the Future

Users in the US, Canada, Australia, and New Zealand can try out a voice demo that uses full-duplex speech technology. This allows Meta AI to generate speech directly, bypassing traditional text-to-speech methods for a more human-like experience. Though still experimental and prone to occasional issues, it provides an early look at AI’s evolving voice capabilities.

More Than Just Voice: Visuals and Discovery

The Meta AI app supports both voice and text interactions. It also integrates advanced image generation and editing features, allowing users to craft visuals directly through conversation. The new Discover feed brings a social layer to the experience—users can browse, remix, and share creative AI prompts with others, though posting remains opt-in.

From Glasses to Desktop: A Unified AI Ecosystem

Meta AI is expanding beyond phones. The company is merging the new app with the existing Meta View companion app for Ray-Ban Meta glasses. Users can now start a conversation on their glasses and continue it in the app or on the web, but a conversation started in the app or on the web cannot be resumed on the glasses. For current Meta View users, device settings and media will transfer automatically when the app updates.

On the desktop, Meta AI now offers an enhanced interface for larger screens, including voice input, image generation presets, and a new document editor that supports image-rich content creation and PDF exports. Document upload and analysis are also in testing.

Privacy and Control at the Core

Meta emphasizes that users remain in control of their experience. Conversations can be started with a tap, and the “Ready to talk” setting allows users to keep voice mode always on. Importantly, nothing is shared unless users explicitly post it.

With this release, Meta is signaling a shift in what it expects of AI assistants: not just functionally helpful, but socially aware, always accessible, and voice-ready.
