AI Search Tools Are Misinforming Users at an Alarming Rate

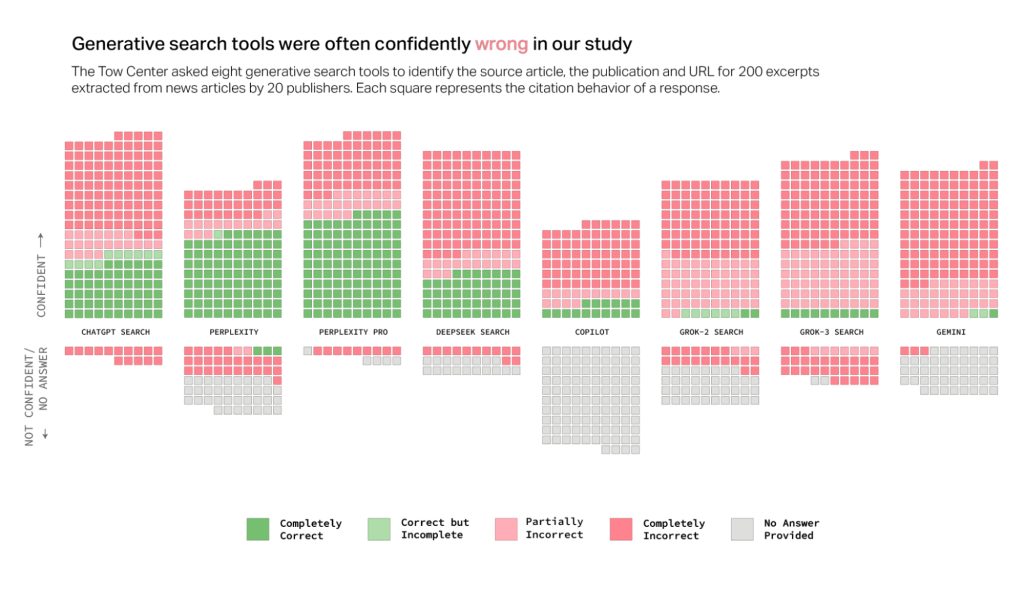

Generative AI search engines are struggling with accuracy, often providing incorrect citations and misleading information. A recent study from Columbia University’s Tow Center for Digital Journalism, published in the Columbia Journalism Review (CJR), found that AI search tools frequently misidentify news sources, misattribute quotes, and even fabricate URLs.

Study Reveals AI Search Accuracy Issues

The study tested eight AI-powered search tools with live web access. Researchers Klaudia Jaźwińska and Aisvarya Chandrasekar ran 1,600 queries (200 per tool) using direct excerpts from real news articles. For each excerpt, they checked whether the tool could correctly identify the article’s headline, original publisher, publication date, and URL.

The findings were alarming. Collectively, the tools answered more than 60% of queries incorrectly, and error rates varied widely between them. Perplexity answered 37% of queries incorrectly, while OpenAI’s ChatGPT Search got 67% wrong (134 of 200). xAI’s Grok 3 fared the worst, giving incorrect answers 94% of the time.

Paid AI Search Models Had Higher Error Rates

Surprisingly, premium versions of AI search tools were in some ways less reliable. Perplexity Pro ($20/month) and Grok 3’s premium service ($40/month) answered more queries correctly than their free counterparts, yet had higher overall error rates, because they confidently answered queries even when they lacked correct information.

Instead of declining to answer when unsure, the AI models often produced confabulations: plausible-sounding but false answers. This is worrying as more users turn to AI for quick answers without verifying the facts.

Citations and Source Attribution Problems

The study also found that some AI search tools appeared to ignore publishers’ efforts to control how their content is used, such as Robots Exclusion Protocol (robots.txt) settings. Some retrieved content from paywalled articles despite publisher restrictions. For example, Perplexity’s free version correctly identified excerpts from paywalled National Geographic articles, even though National Geographic had explicitly disallowed Perplexity’s web crawlers.

Another issue was misleading citations. AI tools frequently pointed users to syndicated copies of articles rather than to the original publisher’s site. So even when AI search engines cited a source, they often failed to send traffic to the original publisher. Instead, users landed on platforms such as Yahoo News, where the same content had been republished.

Broken and Fake URLs Add to the Problem

The problems don’t stop at misattribution. AI search tools also fabricated URLs outright. More than half of the citations from Google’s Gemini and Grok 3 led to broken or fabricated links. Of 200 citations tested from Grok 3, 154 (77%) were dead links that sent users to error pages instead of the cited source.

These problems create a dilemma for publishers. If they block AI crawlers, their content may go uncredited. If they allow access, they risk AI-driven misinformation spreading while losing direct traffic.

Experts Warn of the Risks of AI Search

Mark Howard, chief operating officer of Time magazine, expressed concern about how little transparency and control publishers have over the way their content appears in AI-generated search results. He acknowledged that AI tools are improving but warned users to remain cautious.

“Today is the worst that the product will ever be,” Howard said, emphasizing ongoing improvements. However, he also noted that users should be skeptical of free AI tools, stating, “If anybody as a consumer is right now believing that any of these free products are going to be 100 percent accurate, then shame on them.”

AI Companies Respond, but Concerns Remain

OpenAI and Microsoft acknowledged receiving the findings but did not directly address the specific accuracy issues. OpenAI pointed to its commitment to support publishers by driving traffic through summaries, quotes, clear links, and attribution, while Microsoft stated that it respects publisher directives and adheres to the Robots Exclusion Protocol.

Despite these assurances, the study suggests that AI search tools are not yet reliable enough for research or news discovery. Users must cross-check sources before trusting AI-generated search results.

A Personal Perspective: Why I Don’t Rely on AI for Research

While AI search tools offer convenience, they are not yet a substitute for thorough, fact-based research. As someone who values accuracy, I do not rely on AI for research or data citation. The tendency of these tools to generate misleading or incorrect information makes them unreliable for fact-finding. Instead, I prefer to verify sources manually and cross-check information from reputable publishers.

That being said, AI does have its strengths. For writing purposes, AI is a powerful tool that enhances efficiency and helps structure content. But when it comes to research and citations, nothing beats human diligence and critical thinking.

As AI evolves, improvements will surely come. But for now, users should be cautious and approach AI-generated information with a skeptical eye.